Introducing Velda: Cloud Development That Actually Feels Local

Published on July 30, 2025 by Chuan Qiu • 4 min read

Simplify cloud development with instant access to GPU clusters, AI training resources, and high-performance computing - without the complexity of containers or Kubernetes.

The Cloud Development Problem: Complexity vs. Cost

AI developers and teams running compute-intensive workloads face a painful dilemma: either deal with the complexity of containers, clusters, and resource tuning, or pay thousands monthly for always-on powerful instances that sit idle during development.

Need GPU power for AI training? You need 8 H100s for a few hours. Processing massive datasets? Maybe 64 CPU cores. Building and testing applications? High-memory instances. But current cloud solutions force you to choose between:

• Expensive idle time: Provision powerful instances that waste money during development downtime

• Kubernetes complexity: Navigate container orchestration just to run a simple test, script, or service

• Resource queues: Wait in long queues on shared computing clusters

• Container overhead: Deal with registry management and dependency mismatches

Whether you're training neural networks, processing massive datasets, or running distributed builds, there has to be a better way.

Velda's Vision: Cloud Computing That Feels Local

We believe running compute workloads in the cloud should be as simple as running them locally.

Imagine having instant access to any compute configuration — from GPU clusters for AI training to high-CPU instances for data processing to massive memory for analytics — but only paying for the exact compute time you use.

No cluster management. No container overhead. No idle costs.

How Velda Simplifies Cloud Development

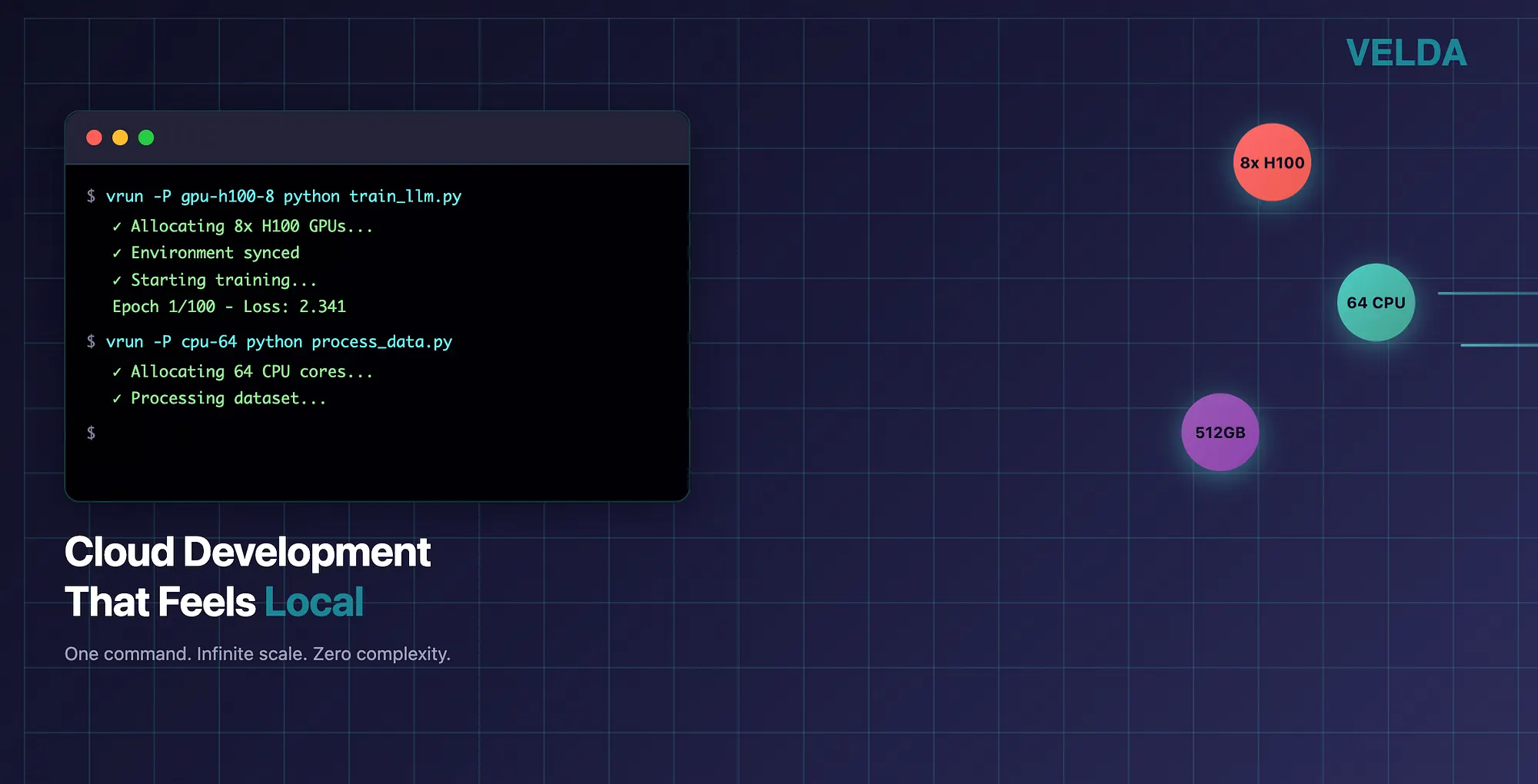

One command prefix changes everything. When developing AI models, processing data, or running any compute-intensive application on Velda, simplicity is key. The vrun command is all you need to scale from your dev environment to any compute configuration:

# AI model training with 8 H100 GPUs

vrun -P gpu-h100-8 python train_llm.py

# Large-scale data processing with 64 CPUs

vrun -P cpu-64 python process_dataset.py

# GPU-accelerated fine-tuning with A100s

vrun -P gpu-a100-2 python fine_tune.py

# Parallel builds with 32 CPU cores

vrun -P cpu-32 make -j32 build_projectBehind the scenes, Velda automatically:

• Allocates resources instantly: GPUs, CPUs, and memory on-demand

• Shares your environment: Libraries, dependencies, datasets, and configs travel with your code

• Streams real-time output: Logs and results appear as if running locally

• Auto-shutdown: Expensive resources terminate automatically when processes complete

You'll hardly notice your workload is running on different compute instances. No Docker images, Kubernetes manifests, or resource tuning required.

How Velda Compares to Existing Solutions

Velda vs. Kubernetes: Simplicity Without Sacrifice

While Kubernetes offers powerful orchestration, it comes with significant complexity overhead:

Kubernetes challenges: • Complex cluster setup and management requirements

• Container dependency conflicts and version mismatches

• Registry cleanup and image management overhead

• High latency from build/push/pull cycles

• Auto-scaling configuration complexity

Velda's advantage: • Zero setup per workload — just use vrun

• No container issues — your environment travels with your code

• Instant execution — no image build/push/pull cycles

• Smart resource allocation — minimal scheduling overhead

• Built-in auto-scaling — no complex configuration needed

Velda vs. Slurm: Cloud-Native by Design

Traditional HPC schedulers like Slurm work well for research clusters but fall short in modern cloud environments:

Slurm limitations: • Batch-only focus: Primarily designed for batch jobs with limited service support

• No load balancing: Lacks native load balancing capabilities

• Manual scaling: Static resource allocation requiring manual intervention

• Single-cluster limitation: Typically tied to single on-premises clusters

• Shared environments: No isolation between users

Velda's cloud-native approach: • Full service support: Services and load balancers included

• Auto-scaling architecture: Cloud-native with automatic scaling

• Multi-cloud compatibility: Works seamlessly across cloud providers

• Kubernetes integration: Can leverage existing Kubernetes backends

• Isolated environments: Customizable for every developer

Use Cases: What You Can Build with Velda

Transform complex workflows into simple commands with Velda:

🤖 Machine Learning & AI Development

• Train models with any number of GPUs without hardware constraints

• Run inference at scale with automatic resource allocation

• Experiment freely with different model architectures

📊 Data Processing & Analytics

• Start Ray clusters for large-scale data pipelines

• Process massive datasets that exceed local machine capacity

• Run distributed computing workloads seamlessly

🛠️ Development & Testing

• Build on powerful machines without local hardware limits

• Distribute build actions across compute clusters

• Performance test at scale with real-world resource allocation

🏗️ Multi-Service Applications

• Deploy distributed services across multiple machines

• Test complex architectures in realistic environments

• Scale components independently based on demand

👥 Team Productivity

• Instant developer onboarding with cloned environments

• Eliminate idle costs during coding sessions

• Standardize development environments across teams

Enterprise-Ready Cloud Development

Velda is built for teams and organizations that demand:

• 🔒 Security: Everything runs in your cloud environment

• 🎛️ Control: No data leaves your infrastructure

• 💰 Cost Efficiency: Use existing cloud credits and enterprise discounts

• 🔄 Flexibility: Multi-cloud and on-premises support

• 🔗 Integration: Ready for enterprise workflows and existing toolchains

Get Started with Velda Today

Velda is available as open-source and enterprise solutions today. Hosted option coming soon.

🚀 Ready to Experience Velda?

Book a demo now and see firsthand how cloud development can feel as natural as local development.

Visit velda.io to get started and discover how cloud development can finally feel local.

📧 Stay Updated

Follow us for the latest updates: